Large language models are a sustaining innovation for Siri

Why it will be challenging to replace consumer voice assistants with ChatGPT

Many people assume that large language models (LLMs) will disrupt existing consumer voice assistants. Today’s ChatGPT is largely unable to complete real-world tasks like hailing an Uber, but it is far better than Siri at understanding and generating language, especially in response to novel requests.

This passage from Tom’s Hardware captures the sentiment I see among tech commentators:

GPT-4o will enable ChatGPT to become a legitimate Siri competitor, with real-time conversations via voice that are responded to instantly without lag time. […] ChatGPT’s new real-time responses make tools like Siri and Echo seem lethargic. And although ChatGPT likely won’t be able to schedule your haircuts like Google Assistant can, it did put up admirable real-time translating chops to challenge Google.

Last year, there were rumors that OpenAI was working on its own hardware, which would open the possibility of integrating ChatGPT at the system level along the lines of the Humane Ai Pin. Would such a product be able to mount a successful challenge against Siri, Alexa, and Google Assistant?

After watching Apple’s WWDC keynote yesterday and seeing the updated Siri APIs, I think it’s more likely that LLMs are a sustaining innovation for Siri: a technological innovation that strengthens the position of incumbent voice assistants.

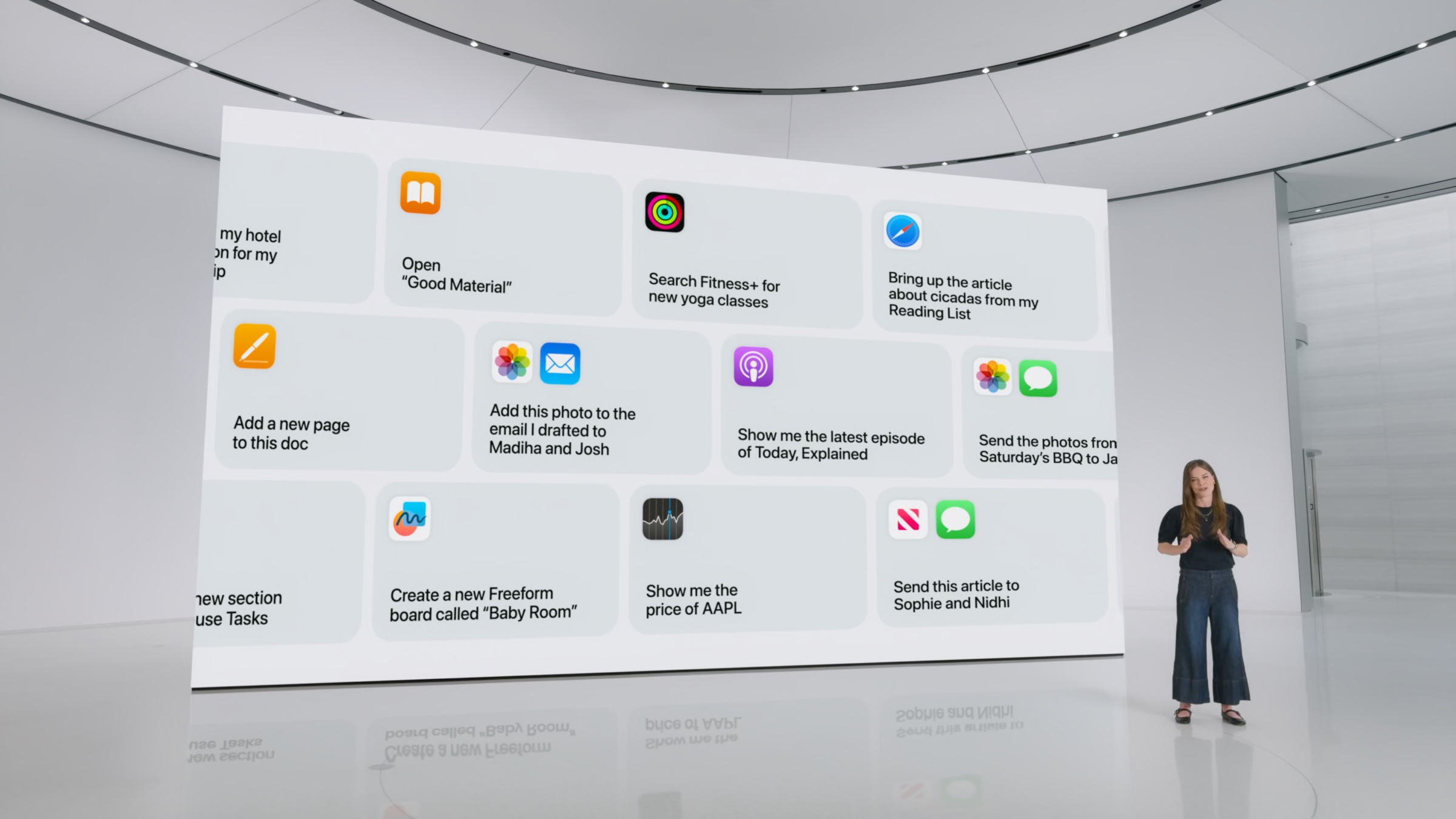

Apple promised to increase the number of ways in which Siri can take action in apps. Source: Apple.

Traditional voice assistants work by matching the user’s queries to a fixed set of intents. Pierce Freeman explains the general approach:

The previous generation of personal assistants had control logic that was largely hard-coded. They revolved around the idea of an intent — a known task that a user wanted to do like sending a message, searching for weather, etc. Detecting this intent might be keyword based or trained into a model that converts a sequence to a one-hot class space. But generally speaking there were discrete tasks and the job of the NLU pipeline was to delegate it to sub-modules.
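To make the keyword-based variant concrete, here is a minimal sketch in Python. The intent names and keyword lists are invented for illustration and are not taken from Siri or any shipping assistant.

```python
import re
from typing import Optional

# Hypothetical intents and trigger keywords, made up for this example.
INTENT_KEYWORDS = {
    "send_message": {"text", "message", "tell"},
    "get_weather": {"weather", "forecast", "rain"},
    "set_timer": {"timer", "countdown"},
}


def detect_intent(query: str) -> Optional[str]:
    """Return the intent whose keywords overlap the query the most, or None."""
    words = set(re.findall(r"[a-z']+", query.lower()))
    best_intent, best_score = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(words & keywords)
        # Strict comparison: on a tie, the intent listed first wins.
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent
```

With this table, “What's the weather in SF?” resolves to `get_weather`, but “Is it going to be wet tomorrow?” matches nothing, and “Tell me the forecast” ties between two intents and falls to `send_message` simply because it is listed first.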

Once the query has been matched to an intent, the next step is to “fill in the blanks” for any inputs needed by the intent:

If it believes you’re looking for weather, a sub-module would attempt to detect what city you’re asking about. This motivated a lot of the research into NER (named entity recognition) to detect the more specific objects of interest and map them to real world quantities. `city:San Francisco` and `city:SF` to `id:4467` for instance.

Conversational history was implemented by keeping track of what the user had wanted in previous steps. If a new message is missing some intent, it would assume that a previous message in the flow had a relevant intent. This process of back-detecting the relevant intent was mostly hard-coded or involved a shallow model.
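The slot-filling and history steps described above can be sketched roughly as follows. This is an illustration under stated assumptions, not how any real assistant is implemented: the alias table, the Oakland id, and the `Conversation` class are hypothetical, and only the id 4467 comes from the quoted example.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical alias table: several surface forms map to one canonical id.
# 4467 is the id from the quoted example; the Oakland entry is made up.
CITY_ALIASES = {"san francisco": 4467, "sf": 4467, "oakland": 5012}


def extract_city_id(query: str) -> Optional[int]:
    """Return the id of the longest known city alias found in the query."""
    lowered = query.lower()
    for alias in sorted(CITY_ALIASES, key=len, reverse=True):
        # Word boundaries keep "sf" from matching inside longer words.
        if re.search(rf"\b{re.escape(alias)}\b", lowered):
            return CITY_ALIASES[alias]
    return None


@dataclass
class Conversation:
    last_intent: Optional[str] = None

    def handle(self, query: str) -> dict:
        # Toy intent detection: a single hard-coded keyword.
        intent = "get_weather" if "weather" in query.lower() else None
        if intent is None:
            # Hard-coded back-detection: reuse the previous turn's intent.
            intent = self.last_intent
        self.last_intent = intent
        return {"intent": intent, "city_id": extract_city_id(query)}
```

After a first turn of “What's the weather in San Francisco?”, a follow-up like “How about SF?” carries no intent of its own, so `handle` falls back to `get_weather` and resolves the city to the same id.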

A natural outcome is that Apple is forced to develop an expansive and complicated system of intents, because adding intents is the only way to expand the assistant’s capabilities. In 2016, Apple also allowed third-party developers to integrate their apps’ functionality by providing intents through SiriKit. Once the developer defines an intent’s inputs and outputs, it can appear in Siri, the Shortcuts app, proactive notifications, etc. alongside Apple’s first-party intents. Similar frameworks exist on other platforms: Android App Actions and Alexa Skills.
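As a rough mental model of what “defining the inputs and outputs” buys the platform, consider the sketch below. It is not the SiriKit API: `Intent`, `IntentRegistry`, and the `request_ride` handler are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Intent:
    name: str
    inputs: Dict[str, type]      # parameter name -> expected type
    output: type                 # type of the value the handler returns
    handler: Callable[..., Any]  # app code that performs the task


class IntentRegistry:
    """Hypothetical platform-side registry shared by all apps."""

    def __init__(self) -> None:
        self._intents: Dict[str, Intent] = {}

    def register(self, intent: Intent) -> None:
        if intent.name in self._intents:
            raise ValueError(f"intent {intent.name!r} is already registered")
        self._intents[intent.name] = intent

    def invoke(self, name: str, **params: Any) -> Any:
        intent = self._intents[name]
        # The declared schema lets the platform validate before calling the app.
        if set(params) != set(intent.inputs):
            raise ValueError(f"{name} expects exactly {sorted(intent.inputs)}")
        for key, expected in intent.inputs.items():
            if not isinstance(params[key], expected):
                raise TypeError(f"{key} must be {expected.__name__}")
        result = intent.handler(**params)
        if not isinstance(result, intent.output):
            raise TypeError(f"{name} must return {intent.output.__name__}")
        return result


# A made-up third-party intent from a ride-hailing app.
def request_ride(destination: str, seats: int) -> str:
    return f"Ride for {seats} to {destination} requested"


registry = IntentRegistry()
registry.register(
    Intent("request_ride", {"destination": str, "seats": int}, str, request_ride)
)
```

Because every intent declares its schema up front, the same registry entry could in principle back a voice command, a shortcut, or a suggestion without the app knowing which surface triggered it.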

However, no matter how rich the intent library becomes, the overall user experience can still suffer if access is gated by a brittle intent-matching process. The process can fail in two ways: (1) matching the request to the wrong intent, or (2) matching the correct intent but parsing the request parameters incorrectly. In my opinion, the intent-matching stage is the primary source of users’ frustration with Siri.

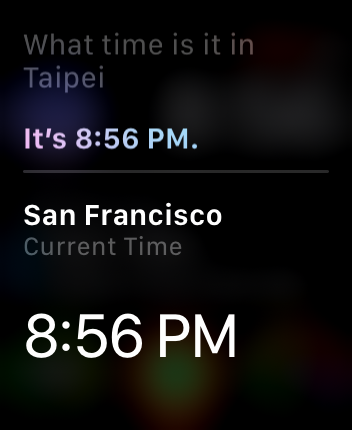

Incorrect named entity recognition by Siri.

Contrast this with ChatGPT plugins, a similar system that allows the model to interact with external APIs by determining which plugin might be relevant to the user’s request, then reading the plugin’s API specification to determine the input and output parameters. In other words, the intent matching is performed by an LLM. The generalist nature of LLMs seems to reduce brittleness. For example, when using the code interpreter plugin, the model can write arbitrary Python code and fix resulting runtime errors.
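The routing loop described here might look something like the following sketch. Everything in it is an assumption for illustration: the plugin specs are simplified stand-ins for real API specifications, the prompt wording is invented, and `canned_model` replaces an actual LLM call so the example runs offline.

```python
import json
from typing import Callable

# Hypothetical, heavily simplified plugin specs.
PLUGINS = [
    {"name": "weather", "description": "Get the forecast for a city.",
     "parameters": ["city"]},
    {"name": "rides", "description": "Request a car to a destination.",
     "parameters": ["destination"]},
]


def build_prompt(request: str) -> str:
    """Show the model every plugin spec and ask for a JSON decision."""
    specs = json.dumps(PLUGINS, indent=2)
    return (
        "Choose one plugin for the request and reply with JSON of the form "
        '{"plugin": "<name>", "arguments": {...}}.\n'
        f"Plugins:\n{specs}\nRequest: {request}"
    )


def route(request: str, complete: Callable[[str], str]) -> dict:
    """Ask the model to pick a plugin, then check its answer against the spec."""
    reply = json.loads(complete(build_prompt(request)))
    spec = next((p for p in PLUGINS if p["name"] == reply.get("plugin")), None)
    if spec is None:
        raise ValueError("model chose an unknown plugin")
    if set(reply.get("arguments", {})) != set(spec["parameters"]):
        raise ValueError("arguments do not match the plugin spec")
    return reply


def canned_model(prompt: str) -> str:
    # Stand-in for a real LLM call; always returns the same decision.
    return json.dumps({"plugin": "rides", "arguments": {"destination": "SFO"}})
```

Calling `route("I need to get to the airport", canned_model)` returns the parsed decision. The point of the design is that no keyword table appears anywhere: the mapping from request to plugin lives entirely in the model, and the surrounding code only validates the result.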

The main issue for challengers (OpenAI, Humane, and Rabbit) is the lack of third-party integrations to make their assistants helpful in consumers’ digital lives, extending beyond general knowledge tasks. For example:

- The Humane Ai Pin only streams music from Tidal, not Spotify or Apple Music.

- The Rabbit R1 “large action model” is, in reality, just a few handwritten UI automation scripts for the applications shown in their demo. The system does not appear to generalize to unseen applications.

- In general, while companies working on UI agents have shown some limited demos, I’m not aware of any that run with high reliability at scale. Even if they achieve scale and generalization, their agents could be made less reliable by app developers using anti-scraping techniques, either because the developers prefer to own the customer relationship or to gain leverage in future partnership negotiations. This type of system is probably a few years out at a minimum.

Without a large user base, developers have no incentive to port their apps, leaving the integration work to the platform owner, as in the cases of Humane and Rabbit. Meanwhile, Apple, Amazon, and Google each have a pre-existing app ecosystem. Their position as aggregators means developers are highly motivated to access the enormous installed base of iOS, Alexa, and Android devices.

Assuming LLM technology will become a commodity, the incumbents’ in-house LLMs ought to be able to provide decent intent matching and language skills. Combined with an expansive library of intents, it seems very possible that integrating LLMs will cement the incumbent voice assistants as the dominant platforms. Challengers might be better off building products in areas that don’t depend on third-party integrations for key functionality.